EEG-based Decoding of Selective Visual Attention in Superimposed Videos

Abstract

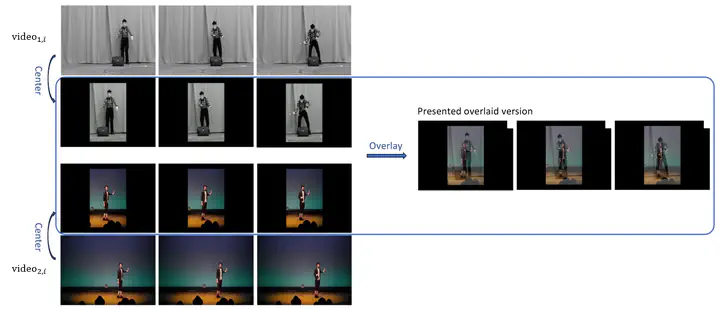

Selective attention enables humans to efficiently process visual stimuli by enhancing important elements and filtering out irrelevant information. Determining where visual attention is directed is a fundamental problem in neuroscience, with potential applications in brain-computer interfaces. Conventional paradigms often use synthetic stimuli or static images, but visual stimuli in real life contain smooth and highly irregular dynamics. We show that these irregular dynamics can be tracked in electroencephalography (EEG) signals and used to decode selective visual attention. To this end, we propose a free-viewing paradigm in which participants attend to one of two superimposed videos, each showing a center-aligned person performing a stage act. Superimposing the videos ensures that relative differences in the neural responses are not driven by differences in object location. A stimulus-informed decoder is trained to extract EEG components correlated with the motion patterns of the attended object, and it detects the attended object in unseen data with significantly above-chance accuracy. This shows that EEG responses to naturalistic motion are modulated by selective attention. Eye movements are also found to be correlated with the motion patterns in the attended video, despite the spatial overlap with the distractor. We further show that these eye movements do not dominantly drive the EEG-based decoding and that EEG and gaze data contain complementary information. Moreover, our results indicate that EEG may also capture neural responses to unattended objects. To our knowledge, this study is the first to explore EEG-based selective visual attention decoding on natural videos, opening new possibilities for experiment design.
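As a rough illustration of the decoding principle summarized above, the following sketch trains a spatial filter so that a weighted sum of EEG channels tracks the motion of the attended object, then labels unseen data by comparing correlations with the two candidate motion signals. It is not the decoder used in this study: the ridge-regularized least-squares filter, the toy data generator, and all names (`smooth_signal`, `simulate_trial`, `train_decoder`, `decode_attention`) and parameter values are assumptions made purely for illustration.

```python
import numpy as np


def smooth_signal(rng, n_samples, width=10):
    """Hypothetical stand-in for a motion feature: smoothed, unit-variance noise."""
    kernel = np.ones(width) / width
    signal = np.convolve(rng.standard_normal(n_samples), kernel, mode="same")
    return signal / signal.std()


def simulate_trial(rng, attended, unattended, pattern, noise_std=1.0):
    """Toy EEG (samples x channels). Assumption: the attended motion is
    represented more strongly (gain 1.0) than the unattended one (gain 0.3)."""
    source = 1.0 * attended + 0.3 * unattended
    noise = noise_std * rng.standard_normal((attended.size, pattern.size))
    return np.outer(source, pattern) + noise


def train_decoder(eeg, attended_motion, ridge=1e-3):
    """Ridge-regularized least squares: weights w such that eeg @ w
    approximates the attended motion signal."""
    x = eeg - eeg.mean(axis=0)
    y = attended_motion - attended_motion.mean()
    cov = x.T @ x + ridge * np.eye(x.shape[1])
    return np.linalg.solve(cov, x.T @ y)


def decode_attention(eeg, motion_a, motion_b, w):
    """Return the label of the motion signal that correlates more strongly
    with the decoded EEG component, plus both correlation coefficients."""
    component = (eeg - eeg.mean(axis=0)) @ w
    r_a = np.corrcoef(component, motion_a)[0, 1]
    r_b = np.corrcoef(component, motion_b)[0, 1]
    return ("a" if r_a > r_b else "b"), r_a, r_b


# Usage on synthetic data: train with object "a" attended, test on a new trial.
rng = np.random.default_rng(0)
pattern = rng.standard_normal(8)  # hypothetical 8-channel spatial pattern
a_train, b_train = smooth_signal(rng, 2000), smooth_signal(rng, 2000)
w = train_decoder(simulate_trial(rng, a_train, b_train, pattern), a_train)

a_test, b_test = smooth_signal(rng, 2000), smooth_signal(rng, 2000)
eeg_test = simulate_trial(rng, a_test, b_test, pattern)
label, r_a, r_b = decode_attention(eeg_test, a_test, b_test, w)
print(label, round(r_a, 3), round(r_b, 3))
```

Because the filter is trained only on the attended motion, the same weights can be applied regardless of which object is attended in a new trial; the decision rests solely on which candidate signal the decoded component follows more closely.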