Probabilistic Gain Control in a Multi-Speaker Setting using EEG-Based Auditory Attention Decoding

Abstract

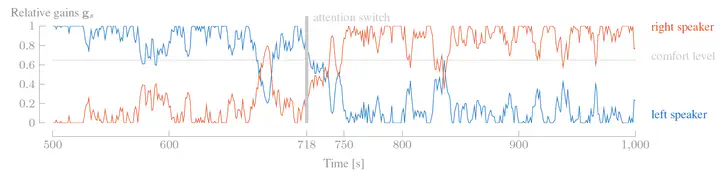

Current hearing aids have difficulty focusing on the correct speaker in complex environments where multiple people are talking simultaneously, because they do not know which speaker the user aims to listen to. A promising solution is to use so-called auditory attention decoding (AAD) algorithms, which infer the attended speaker from brain activity recorded with, e.g., electroencephalography (EEG). AAD models decode, on a window-to-window basis, which speaker a subject wishes to listen to. However, only limited research has been done on how these AAD decisions can be converted into a gain control system that controls the volume of each competing speaker in a hearing aid. Existing gain control systems are difficult to tune, unstable, and/or not designed for situations with more than two competing speakers. We therefore propose a novel general-purpose gain control system that can easily be used with any AAD model and in scenarios with an arbitrary number of speakers. We demonstrate that the gain control system is stable, even for AAD algorithms with very low accuracies and in scenarios with more than two speakers.